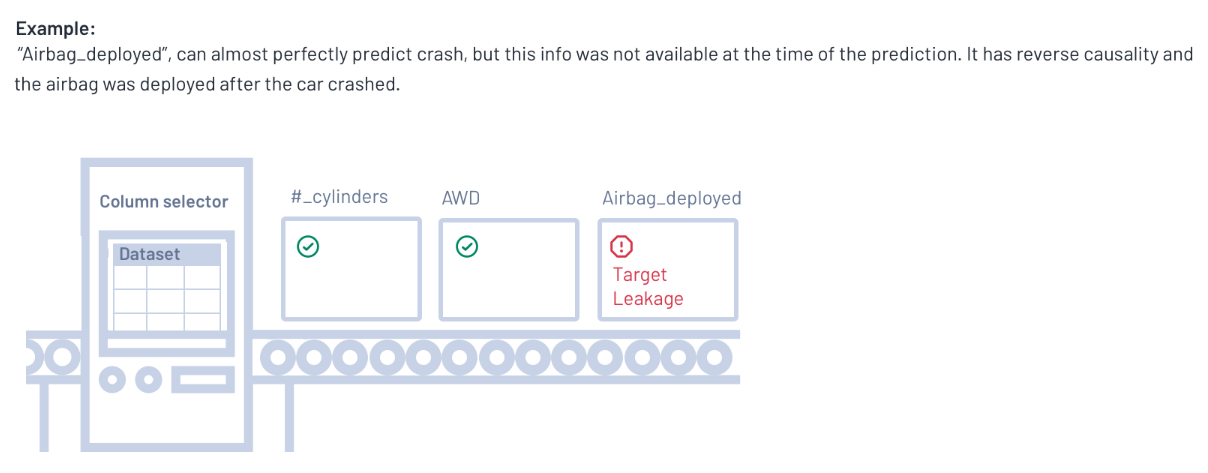

Target Leakage

Target leakage, also known as data leakage, occurs when information that should not be a feature is used to predict the target. The model is built, or trained, on a training dataset that contains information that will not be available in unseen data. Leakage is one cause of model error, and it produces a model whose results seem too good to be true. It occurs more often with complex datasets.

For example, suppose the data was normalized or standardized, or had missing values imputed, using statistics such as the min, max, or mean computed over all of the data. The training dataset then has full knowledge of the distribution of the data. Unseen data has no such knowledge: a new row doesn't know whether there are three other records or three million, so it can't normalize or standardize itself against them. As a result, the model overfits the training data, and the accuracy it reports during training is higher than the accuracy it achieves on unseen data.
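The normalization and imputation example can be sketched in Python. This is a minimal illustration that assumes a single numeric column held in plain lists; the helper names (`fit_mean_imputer`, `apply_imputer`, `fit_minmax`, `apply_minmax`) and the data are hypothetical and are not Alteryx tools. What it shows is the order of operations: split the data first, learn the fill value and scaling statistics from the training rows only, then reuse those same statistics on unseen rows.

```python
from statistics import mean


def fit_mean_imputer(values):
    """Learn the fill value: the mean of the non-missing entries."""
    return mean(v for v in values if v is not None)


def apply_imputer(values, fill):
    """Replace missing entries (None) with a previously learned fill value."""
    return [fill if v is None else v for v in values]


def fit_minmax(values):
    """Learn min-max normalization statistics from the given values only."""
    return min(values), max(values)


def apply_minmax(values, stats):
    """Scale values using previously learned (min, max) statistics."""
    lo, hi = stats
    span = (hi - lo) or 1.0  # avoid division by zero for a constant column
    return [(v - lo) / span for v in values]


# Hypothetical data, split BEFORE any preprocessing happens.
train = [10.0, None, 30.0, 20.0]
unseen = [None, 100.0]

# Leaky: the fill value is computed over training and unseen rows together.
leaky_fill = fit_mean_imputer(train + unseen)

# Leak-free: statistics are learned from the training rows only, then reused.
fill = fit_mean_imputer(train)
train_filled = apply_imputer(train, fill)
unseen_filled = apply_imputer(unseen, fill)

stats = fit_minmax(train_filled)
train_scaled = apply_minmax(train_filled, stats)
unseen_scaled = apply_minmax(unseen_filled, stats)

print(fill, leaky_fill)  # 20.0 40.0
print(unseen_scaled)     # [0.5, 4.5]
```

The leaky fill value (40.0) is pulled upward by an unseen row the model should never have seen. In the leak-free version, unseen values can land outside the 0 to 1 range seen in training (4.5 here); that is expected, because a genuinely new row is scaled only with what was learned from the training data.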

Prevent target leakage

The following actions may help prevent target leakage:

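- Cross-validation: assign data points from your dataset to training and testing sets. For most data the assignment can be random; for time series, keep the split in time order so the model is never trained on data from after the period it is tested on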

- Create a validation dataset, keep it separate from training, and use it later for a final reality check
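Both actions can be sketched together for time-ordered data. This is a minimal illustration that assumes the rows are already sorted oldest first; `holdout_split` and `time_series_folds` are hypothetical helpers, not Alteryx tools. The most recent rows are set aside as the final validation set, and the remaining rows are split into expanding-window folds in which every training row precedes every test row (scikit-learn's `TimeSeriesSplit` follows a similar scheme).

```python
def holdout_split(rows, holdout_size):
    """Set aside the most recent rows as a validation set training never sees."""
    cut = len(rows) - holdout_size
    return rows[:cut], rows[cut:]


def time_series_folds(n_rows, n_folds):
    """Return (train_indices, test_indices) pairs in time order.

    Expanding window: each fold trains only on rows that come before its
    test rows, so no future information leaks into training.
    """
    fold_size = n_rows // (n_folds + 1)
    folds = []
    for k in range(1, n_folds + 1):
        train_end = k * fold_size
        test_end = train_end + fold_size
        folds.append((list(range(train_end)), list(range(train_end, test_end))))
    return folds


# Hypothetical rows, already sorted by timestamp (oldest first).
rows = list(range(10))
development, validation = holdout_split(rows, holdout_size=2)

folds = time_series_folds(len(development), n_folds=3)
for train_idx, test_idx in folds:
    assert max(train_idx) < min(test_idx)  # training always precedes testing
    print(train_idx, test_idx)
```

For data without a time order, the fold assignment can be shuffled instead; the validation set is still kept aside until the final check.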